Global AI Watch: AI, Food Security, and the Case for Institutional Reform

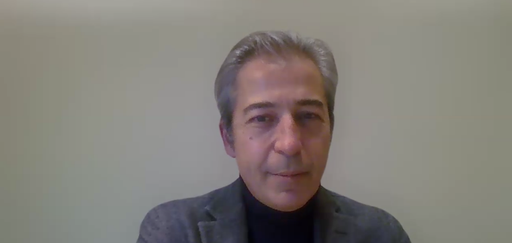

In this interview, B Cavello discusses findings from an Aspen Institute global survey and reflects on what the survey reveals about the intersection of AI and food systems. Practitioners consistently emphasized institutional capacity, governance, and distributional challenges as central constraints. The conversation explores how AI might support greater transparency, participation, and resilience, and what these insights mean for U.S. state and local policymakers working on food security, land governance, and public-sector capacity.